TensorFlow (5-1) RNN Basics

1. Introduction to Recurrent Neural Networks:

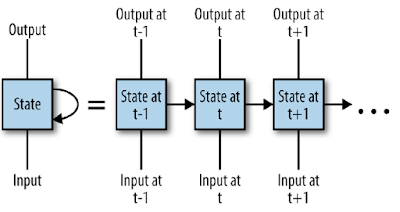

Recurrent neural networks (RNNs) are well suited to processing sequential data such as video, audio, and natural language. The core idea of an RNN is that each new element in the sequence contributes new information, which updates the current state of the model.

2. State Vector:

When we receive new information, our history (memory) is not wiped out; it is updated.

For example, if we ate pizza yesterday, that memory stays in our mind and affects our future decisions. In an RNN, this memory is called the state vector.

The figure illustrates how an RNN updates its state as new information arrives over time. Each decision depends on the history of the sequence.

3. Vanilla RNN:

A vanilla RNN is similar to the neural networks we have used so far. The only difference is that it also passes the previous state into the network, so the result depends on the sequence seen so far.

The equation of the Vanilla RNN is:

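$$h_{t} = \tanh(W_{x}x_{t}+W_{h}h_{t-1}+b)$$

Here x_t is the input at step t, h_t is the state vector at step t, W_x and W_h are weight matrices, and b is a bias vector. The hyperbolic tangent maps any real number to a value strictly between -1 and 1, so every component of the state vector stays in the range (-1, 1).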
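The update rule can be tried out with a few lines of plain Python. This is only a minimal sketch, not TensorFlow code: the function names `rnn_step` and `run_rnn`, the toy sizes (3 input features, 2 state units), and all weight values are assumptions made up for illustration.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One vanilla RNN update: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        # Row i of W_x weighs the current input; row i of W_h weighs the previous state.
        z = b[i]
        z += sum(w * x for w, x in zip(W_x[i], x_t))
        z += sum(w * h for w, h in zip(W_h[i], h_prev))
        h_t.append(math.tanh(z))
    return h_t

def run_rnn(inputs, h0, W_x, W_h, b):
    """Feed a sequence through the cell, carrying the state forward."""
    h = h0
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Hypothetical toy parameters: 3 input features, 2 state units.
W_x = [[0.5, -0.2, 0.1],
       [0.3, 0.8, -0.5]]
W_h = [[0.1, 0.4],
       [-0.3, 0.2]]
b = [0.0, 0.1]
h0 = [0.0, 0.0]

sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = run_rnn(sequence, h0, W_x, W_h, b)

# The same elements in reverse order lead to a different final state.
reversed_states = run_rnn(sequence[::-1], h0, W_x, W_h, b)
print(states[-1])
print(reversed_states[-1])
```

Because the previous state is fed back in at every step, the two printed final states differ even though both runs see the same three inputs. In practice the loops over rows would be replaced by matrix operations in NumPy or TensorFlow; plain lists are used here only to keep the sketch dependency-free.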

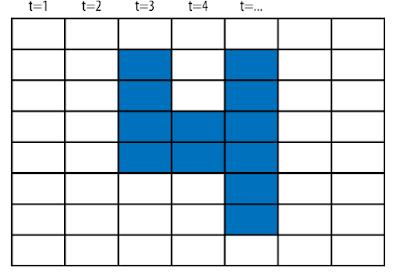

4. MNIST images as sequences:

MNIST images are 28x28 pixels. An MNIST image can be treated as a sequence of 28 elements, each a vector of 28 pixels.
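As a rough illustration of this idea, the sketch below splits one image into 28 sequence elements. It assumes the image arrives as a flat list of 784 pixel values and that each row of the image is one element of the sequence; the helper name `image_to_sequence` and the placeholder pixel data are hypothetical.

```python
def image_to_sequence(flat_pixels, steps=28, features=28):
    """Split a flattened image into `steps` rows of `features` pixels each."""
    if len(flat_pixels) != steps * features:
        raise ValueError(
            "expected %d pixels, got %d" % (steps * features, len(flat_pixels))
        )
    return [flat_pixels[r * features:(r + 1) * features] for r in range(steps)]

# Placeholder image: each pixel value equals its flat index (not real MNIST data).
fake_image = list(range(28 * 28))
sequence = image_to_sequence(fake_image)
print(len(sequence), len(sequence[0]))  # 28 28
```

Each of the 28 rows can then be fed to the RNN as the input x_t for one time step, so the network reads the image from top to bottom.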

