TensorFlow (4-3) CNN of MNIST

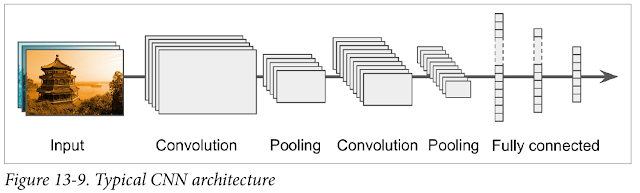

1. Recall the process of CNN:

在 4-1 我們討論過 CNN 的流程, CNN 的流程如下圖所示:

- 輸入圖片

- 捲積然後用 relu 輸出響應

- 池化 (選擇較重要的資訊)

- 重複 2 和 3

- 扁平化並連接神經元

- softmax 輸出機率

今天我們要參照這個流程實做 MNIST 的 CNN。

2. CNN Wrapper functions:

定義一些包裝過的函式,這樣在計算時可以提高可讀性,前篇有說明過 max_pool 和 conv2d 的用法。

#!/usr/bin/python3

import tensorflow as tf

import numpy as np

from tensorflow.examples.tutorials.mnist import input_data

def weight_variable(shape):

initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)

def bias_variable(shape):

initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)

def conv2d(x, W):

return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):

return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1], padding='SAME')

def conv_layer(input, shape):

W = weight_variable(shape)

b = bias_variable([shape[3]])

return tf.nn.relu(conv2d(input, W) + b)

def full_layer(input, size):

in_size = int(input.get_shape()[1])

W = weight_variable([in_size, size])

b = bias_variable([size])

return tf.matmul(input, W) + b

最重要是後面三個函式,我們會重複捲積和池化然後輸出到 full layer。

3. main process:

我們把整個計算分成三部份 computational_process, computational_targets and train:

1. computational_process: x 接收數筆 MNIST 資料,x_image 會把 MNIST 變成 28*28 的正方形圖片,以便之後的捲積。 第一層捲積的 filter 矩陣維度為 5*5*1*32,也就是 32 個 5*5*1 (H*W*D) 的 filter。 因為我們使用 padding='SAME' 模式,經過第一次捲積後輸出的維度為 -1*28*28*32,再經過 max_pool_2x2 運算後後維度為 -1*14*14*32。 第二層捲積的 filter 矩陣為 5*5*32*64,也就是 64 個 5*5*32 (H*W*D) 的 filter,經過 conv_layer 和 max_pool_2x2 運算後後維度為 -1*7*7*64。最後再連接到大小為 1024 的 full layer 上。

2. computational_tagets: 這邊和一般的神經網路差不多,定義了精準度和誤差。比較特別的是這邊選用 AdamOptimizer。

3. train: 訓練也和之前差不多,不過我們為了加速 CNN 的運行定義了 KEEP_PROB 這個參數。KEEP_PROB 是用來避免 over fitting,假設你的 entropy 刪減掉一些神經元時結果仍然差不多,我們就可以考慮丟棄一些神經元。

if __name__== "__main__":

with tf.name_scope("computational_process"):

x = tf.placeholder(tf.float32, shape=[None, 784])

y_true = tf.placeholder(tf.float32, shape=[None, 10])

x_image = tf.reshape(x, [-1, 28, 28, 1])

conv1 = conv_layer(x_image, shape=[5, 5, 1, 32])

conv1_pool = max_pool_2x2(conv1)

conv2 = conv_layer(conv1_pool, shape=[5, 5, 32, 64])

conv2_pool = max_pool_2x2(conv2)

conv2_flat = tf.reshape(conv2_pool, [-1, 7*7*64])

full_1 = tf.nn.relu(full_layer(conv2_flat, 1024))

keep_prob = tf.placeholder(tf.float32)

full1_drop = tf.nn.dropout(full_1, keep_prob=keep_prob)

y_conv = full_layer(full1_drop, 10)

with tf.name_scope("computational_targets"):

DATA_DIR = "data"

mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_true, logits=y_conv))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_true, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

with tf.name_scope("train"):

STEPS = 300

BATCH_SIZE = 50

KEEP_PROB = 0.8

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for i in range(STEPS):

batch = mnist.train.next_batch(BATCH_SIZE)

sess.run(train_step, feed_dict={x: batch[0], y_true: batch[1], keep_prob: KEEP_PROB})

if i % 30 == 0:

train_accuracy = sess.run(accuracy,

feed_dict={x: batch[0],

y_true: batch[1],

keep_prob: 1.0})

print("step {}, training accuracy {}".format(i, train_accuracy))

X = mnist.test.images.reshape(10, 1000, 784)

Y = mnist.test.labels.reshape(10, 1000, 10)

test_accuracy = np.mean([sess.run(accuracy,

feed_dict={x:X[i], y_true:Y[i], keep_prob:1.0})

for i in range(10)])

print ("test accuracy: {}".format(test_accuracy))

Reference

[1] Tom Hope, Yehezkel S. Resheff, and Itay Lieder, Learning TensorFlow A Guide to Building Deep Learning Systems , O'Reilly Media (2017)

[2] Nikhil Buduma, Fundamentals of Deep Learning Designing Next-Generation Machine Intelligence Algorithms, O'Reilly Media (2017)

[3] Aurélien Géron, Hands-On Machine Learning with Scikit-Learn and TensorFlow Concepts, Tools, and Techniques to Build Intelligent Systems , O'Reilly Media (2017)

留言

張貼留言